Convolutional Reverb: How and Why Does It Work?

Have you ever listened to a song and wondered how it sounds so rich and full? Or played a video game and been impressed by how immersive its sound effects are? One of the key ingredients behind both is reverb, a sound effect that simulates the natural echoes and reverberation of a space. It adds depth and dimension to audio recordings and enhances the overall sound quality, and one of the most popular ways to produce it is convolutional reverb (also called convolution reverb).

Convolutional reverb is a technique that has gained popularity in the field of audio processing and sound design. This method has become a preferred choice for many sound designers, musicians, and game developers due to its ability to produce realistic and natural-sounding reverberation.

The history of convolutional reverb can be traced back to the 1960s when digital signal processing was in its infancy. Early attempts to simulate reverb involved using analogue circuits and mechanical plates. However, with the advent of digital signal processing, it became possible to simulate reverb using mathematical algorithms. The first real-time convolution reverb processor, the DRE S777, was announced by Sony in 1999. Since then, convolutional reverb has become a widely used technique in the field of audio processing and sound design.

How does Convolutional Reverb work?

Before we dive into the nitty-gritty of convolutional reverb, let's explore a simpler, related idea that will help us build intuition for it. We'll call it "pseudo convolutional reverb": it is not exactly the same thing, but it provides a useful foundation for what follows.

Let's say we want to hear how a person's voice would sound in a particular setting, such as a church or a room. To do this, we play a sound in that space and record a "fingerprint" of how the sound behaves there. This fingerprint, called an impulse response, serves as a reference that can later be applied to other audio recordings to simulate the effect of that environment. The test sound should be as brief as possible while still containing all the audible frequencies (up to around 20,000 Hz), so it is a short, sharp, transient burst such as a loud clap or a balloon pop.
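Why does the test sound need to be so brief? The minimal numpy sketch below compares the spectrum of an idealised one-sample click with that of a longer rectangular burst. It assumes a 44.1 kHz sample rate and idealised signals; the names `click` and `burst` are illustrative only.

```python
import numpy as np

sample_rate = 44100  # assumed CD-quality rate; its Nyquist limit is 22,050 Hz
n = 4096             # FFT length
freqs = np.fft.rfftfreq(n, d=1 / sample_rate)

# Idealised one-sample click versus a ~2.3 ms rectangular burst with the same total area.
click = np.zeros(n)
click[0] = 1.0
burst = np.zeros(n)
burst[:100] = 1 / 100

click_mag = np.abs(np.fft.rfft(click))
burst_mag = np.abs(np.fft.rfft(burst))

# The click has the same magnitude (1.0) at every frequency up to Nyquist,
# while the burst's magnitude falls off quickly above a few hundred hertz.
for f in (100, 1000, 5000, 15000):
    k = int(np.argmin(np.abs(freqs - f)))  # nearest FFT bin to f
    print(f"{f:>6} Hz  click={click_mag[k]:.3f}  burst={burst_mag[k]:.3f}")
```

The shorter the burst, the flatter and wider its spectrum, which is why a sharp transient can excite the whole audible range at once.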

The impulse response is then obtained by recording the sound of that burst using a microphone. This recording captures not only the initial sound but also the reflections, echoes, and reverberations that occur as the sound bounces off the surfaces in the environment.

The recorded sound is then analyzed using signal processing techniques to extract the impulse response. This involves separating the shape of the original burst from the recording, so that what remains describes only how the room delays and attenuates the sound (the direct path as well as the echoes and reverberation). The resulting signal is then normalized to account for differences in microphone sensitivity and other factors.
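The normalisation step can be as simple as rescaling. The numpy sketch below shows two common choices on a made-up decaying response (not a real measurement; `ir` and the other names are illustrative): scaling so the loudest sample is 1, or scaling to unit energy, which is what the torchaudio example later in this post does with `torch.norm`.

```python
import numpy as np

# A made-up impulse response: decaying noise standing in for a real measurement.
rng = np.random.default_rng(0)
ir = rng.standard_normal(2000) * np.exp(-np.arange(2000) / 300)

# Option 1: peak normalisation, so the largest absolute sample is exactly 1.
ir_peak = ir / np.max(np.abs(ir))

# Option 2: energy (L2) normalisation, so the squared samples sum to 1.
# For a white-noise input this keeps the output's average power equal to the input's.
ir_energy = ir / np.linalg.norm(ir)
```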

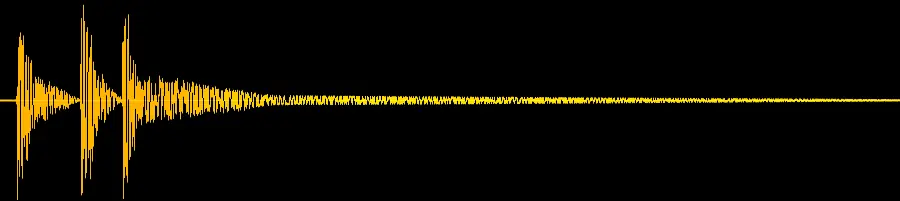

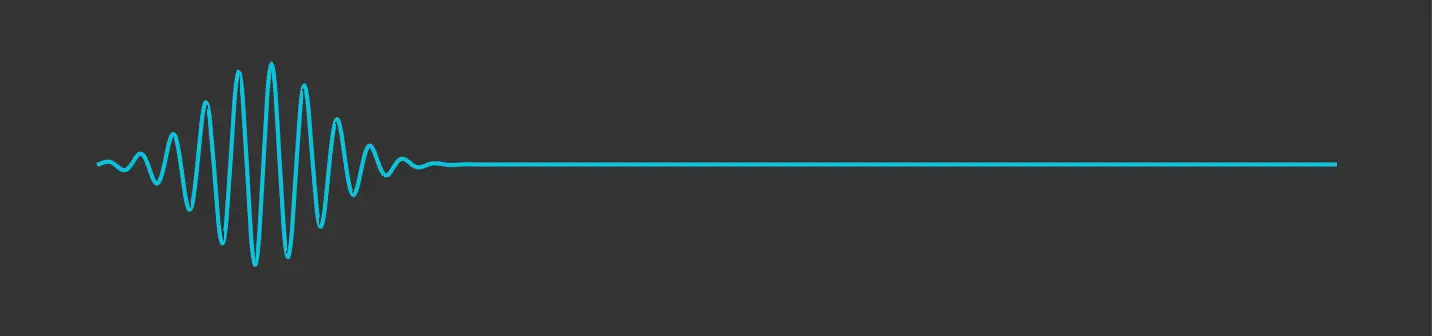

Fig. 1 displays the waveform of a clap signal. Because a real clap waveform is complicated, let's replace it with a simpler signal that is easier to follow; the concept remains the same. Fig. 2 displays this simplified signal, which will serve as the source for the rest of the discussion.

Now, what does the microphone capture when we play a sound in a room? First, it records the original audio signal, but what else does it record? It also captures the reflections of the sound as it bounces off the walls, ceiling, and other surfaces in the room. Take a look at the animation below (Animation 1) to see what the resulting recording looks like.

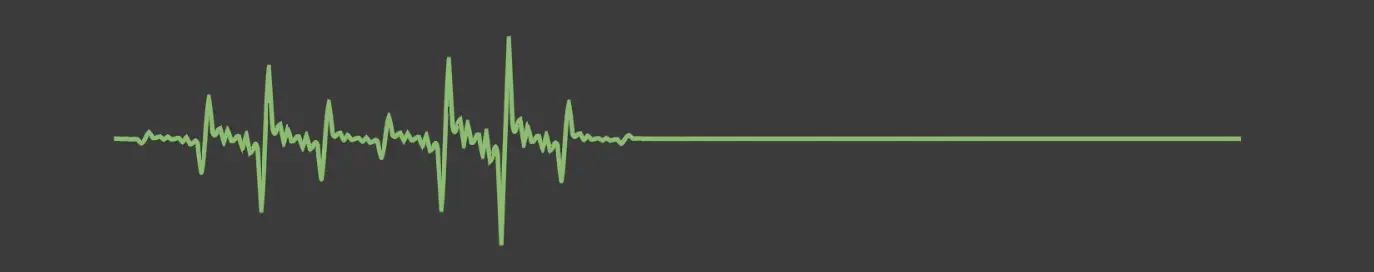

If we take a closer look at the recording, we can see that the original signal repeats a few times: each reflection from a wall or the ceiling adds a quieter copy of the same signal with a certain delay. This matters because it means the recording contains enough information about the room's acoustics, in particular how sound behaves in it, to build a "fingerprint" of the room. To do this, we replace the original signal and each of its repetitions with a single spike placed at that copy's delay, whose height indicates how strongly the copy is present in the recording. Take a look at Animation 2 to see how the fingerprint is obtained from the recording.
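One simple way to sketch this in numpy, under strong assumptions: the source signal is known, its copies in the recording never overlap, and 1 sample = 1 ms (a 1 kHz sample rate) so the numbers stay readable. The recording is simulated with the delays and amplitudes quoted in the next paragraph, and all names are illustrative. Matching the source against the recording is one possible approach chosen for illustration, not necessarily how Animation 2 was produced.

```python
import numpy as np

# Assumption: 1 sample = 1 ms (a 1 kHz sample rate), so delays read directly as milliseconds.
source = np.array([0.5, 1.0, 0.5])  # hypothetical short source signal (a tiny "clap")

# Simulate the room recording: the source plus delayed, attenuated copies of it.
true_delays = [0, 10, 20, 30, 40]
true_gains = [1.0, 0.3, 0.2, 0.15, 0.1]
recording = np.zeros(60)
for d, g in zip(true_delays, true_gains):
    recording[d:d + len(source)] += g * source

# Slide the source along the recording and measure how well it matches at each delay.
# Dividing by the source's energy turns the match score into "how much of the source
# starts at this delay" (exact only when copies do not overlap).
score = np.correlate(recording, source, mode="valid") / np.dot(source, source)

# Keep only local maxima above a small threshold: these become the fingerprint's spikes.
fingerprint = np.zeros_like(score)
for d in range(len(score)):
    left = score[d - 1] if d > 0 else -np.inf
    right = score[d + 1] if d + 1 < len(score) else -np.inf
    if score[d] > 0.05 and score[d] >= left and score[d] >= right:
        fingerprint[d] = score[d]

print(np.flatnonzero(fingerprint), fingerprint[np.flatnonzero(fingerprint)])
```

With overlapping copies or an unknown source this simple matching breaks down, and a proper deconvolution is needed instead.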

Now that we have this fingerprint, we can apply it to any other audio. But first, let's understand what it tells us. The graph is zero everywhere except at the delays where the recording contains a copy of the source signal; the height of each spike is the amplitude of that copy. The first peak has a value of 1, meaning the original signal appears in the recording unchanged. The smaller spikes at 10 ms, 20 ms, 30 ms, and 40 ms mean the same signal was recorded again at those times, but at lower intensity (0.3, 0.2, 0.15, and 0.1 in this case). With this information, we can apply the fingerprint to any other audio using the following steps:

1. Create a blank (silent) signal to hold the result.

2. Add the original audio to the result (the first peak in the fingerprint).

3. For each remaining peak, delay the audio by the corresponding number of milliseconds, scale it by the peak's value, and add it to the result.
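The three steps above can be sketched in a few lines of numpy. This is a minimal illustration, assuming a mono signal stored as a numpy array; `apply_fingerprint` and the constant names are hypothetical, and the fingerprint values are the ones described above.

```python
import numpy as np

def apply_fingerprint(signal, delays_ms, gains, sample_rate):
    """Apply a sparse fingerprint by shift-and-add (steps 1-3 above)."""
    delays = [round(d * sample_rate / 1000) for d in delays_ms]  # milliseconds -> samples
    # Step 1: a blank (silent) result, long enough to hold the last echo.
    result = np.zeros(len(signal) + max(delays))
    # Steps 2-3: the first peak (delay 0, gain 1) copies the original audio;
    # every other peak adds a delayed, scaled copy on top.
    for d, g in zip(delays, gains):
        result[d:d + len(signal)] += g * signal
    return result

# The fingerprint described above.
DELAYS_MS = [0, 10, 20, 30, 40]
GAINS = [1.0, 0.3, 0.2, 0.15, 0.1]

# Hypothetical usage: a short two-sample signal at an assumed 1 kHz sample rate.
wet = apply_fingerprint(np.array([1.0, 0.5]), DELAYS_MS, GAINS, sample_rate=1000)
```

The output is longer than the input by the largest delay, so the tail of the last echo is not cut off.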

Let's say that we want to apply this fingerprint to the signal shown in Figure 3 (see below). Check out Animation 3 to see a visual representation of the process described above.

Great job! Now we have our signal with the reverb of the room applied to it. Looking at it from a different angle, what does each sample contain after the fingerprint has been applied? It holds the value from the original signal, plus the value from 10 ms earlier multiplied by 0.3, the value from 20 ms earlier multiplied by 0.2, and so on for every peak in the fingerprint. From this perspective, the i-th sample of the resulting signal can be computed as follows:

1. Take the i-th sample of the input signal (the direct sound).

2. For each echo peak in the fingerprint, convert its delay from milliseconds to samples.

3. Add the input sample that lies that many samples before the i-th one, multiplied by the peak's coefficient.

4. Repeat step 3 for every echo peak in the fingerprint.
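The same result can be computed one output sample at a time. The minimal numpy sketch below follows steps 1-4 literally; it assumes the delays are already converted to samples at a 1 kHz rate, and the function and constant names are illustrative. It produces the same output as the earlier shift-and-add steps; only the order of the additions changes.

```python
import numpy as np

def reverb_sample(signal, i, echo_delays, echo_gains):
    """Compute output sample i by looking back into the input (steps 1-4 above).

    `echo_delays` are in samples; the direct sound is handled by step 1.
    """
    value = signal[i] if i < len(signal) else 0.0  # step 1: the original value
    for d, g in zip(echo_delays, echo_gains):      # steps 2-4: one term per echo peak
        j = i - d
        if 0 <= j < len(signal):  # outside the input there is only silence
            value += g * signal[j]
    return value

# The echo peaks from the fingerprint, at an assumed 1 kHz rate (1 sample = 1 ms).
ECHO_DELAYS = [10, 20, 30, 40]
ECHO_GAINS = [0.3, 0.2, 0.15, 0.1]

signal = np.array([1.0, 0.5])
wet = np.array([reverb_sample(signal, i, ECHO_DELAYS, ECHO_GAINS)
                for i in range(len(signal) + max(ECHO_DELAYS))])
```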

In essence, this approach recreates the room's reverb by adding delayed and attenuated copies of the original signal to itself, which is what makes the result sound more natural and realistic.

But what is convolution?

Convolution is a mathematical operation, widely used in digital signal processing, that combines two signals into a third signal describing how one of the original signals is modified by the other as it passes through it. The basic idea is to flip one signal (often called the "kernel"), slide it over the other (often called the "input signal"), and at each position compute the sum of the products of the overlapping samples. This is precisely what we need to add reverberation to our sound signal.
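For discrete signals, with $x$ the input, $h$ a kernel of $M$ samples and $y$ the output, the flip-and-slide description corresponds to the standard convolution sum:

$$
y[n] = (x * h)[n] = \sum_{k=0}^{M-1} h[k]\, x[n-k]
$$

The index $n-k$ is where the flip comes from: as $k$ walks forward through the kernel, the input is read backwards from sample $n$. If $h$ is the fingerprint, every zero entry drops out of the sum and each spike contributes the input from $k$ samples earlier scaled by $h[k]$, which is exactly the per-sample recipe from the previous section.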

Check out Animation 4 for a simple example. Here the input signal has a single spike at the beginning followed by zeros (silence), and the kernel is the same fingerprint we used before. The animation shows the sliding process and how the convolved signal is built up. Notice that the resulting signal has the same spike at the beginning, followed by a few "echoes" of that spike, exactly as described by the kernel.
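A direct, deliberately slow sketch of the convolution sum reproduces the setup of Animation 4. It assumes 1 sample = 1 ms, uses the fingerprint from earlier as the kernel, and `convolve_direct` is an illustrative name; numpy's `np.convolve` computes the same thing far more efficiently.

```python
import numpy as np

def convolve_direct(x, h):
    """Full discrete convolution, y[n] = sum_k h[k] * x[n - k], written out as loops."""
    y = np.zeros(len(x) + len(h) - 1)
    for n in range(len(y)):          # each position of the sliding (flipped) kernel
        for k in range(len(h)):      # sum of products where kernel and input overlap
            if 0 <= n - k < len(x):
                y[n] += h[k] * x[n - k]
    return y

# Kernel: the fingerprint from earlier, at an assumed 1 kHz rate (1 sample = 1 ms).
kernel = np.zeros(41)
kernel[[0, 10, 20, 30, 40]] = [1.0, 0.3, 0.2, 0.15, 0.1]

# Input: a single spike followed by silence, as in Animation 4.
impulse = np.zeros(50)
impulse[0] = 1.0

out = convolve_direct(impulse, kernel)
```

Convolving a single spike returns the kernel itself, which is also why a recording of a (near-)impulse can serve as a room's impulse response.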

Now let's look at a more complicated example in Animation 5. This time the input signal contains two sounds: one at the beginning and a second one shortly afterwards. In the resulting signal, the first sound appears as it is, followed by a few of its echoes; then the second sound arrives, mixed with some of the first sound's echoes. After that, the second sound reverberates until it eventually fades to silence.
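The overlap described above follows from the fact that convolution is linear: reverberating a mix of two sounds gives the same result as reverberating each sound separately and adding them. A minimal numpy sketch, assuming two hypothetical spikes (at 0 ms and 20 ms) as the "sounds" and the fingerprint as the kernel at 1 sample = 1 ms:

```python
import numpy as np

# The fingerprint as a kernel, at an assumed 1 kHz rate (1 sample = 1 ms).
kernel = np.zeros(41)
kernel[[0, 10, 20, 30, 40]] = [1.0, 0.3, 0.2, 0.15, 0.1]

# Two hypothetical "sounds": a spike at 0 ms and a quieter spike at 20 ms.
first = np.zeros(80)
first[0] = 1.0
second = np.zeros(80)
second[20] = 0.8

mixed = np.convolve(first + second, kernel)

# Linearity: reverberating the mix equals mixing the two reverberated sounds.
separate = np.convolve(first, kernel) + np.convolve(second, kernel)

# At 20 ms the output holds the second sound (0.8) plus the first sound's 20 ms echo (0.2);
# at 30 ms it holds the first sound's 30 ms echo (0.15) plus the second's 10 ms echo (0.24).
```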

For a better understanding of what convolution really does, check out the video listed in the References.

The Real Convolutional Reverb

We are now close to understanding what convolutional reverb does. In actual convolutional reverb, instead of the sparse fingerprint we used earlier, the room is represented by its impulse response, which has a value at every sample rather than just a handful of spikes. This impulse response is also obtained by recording a clap (or another short, sharp sound) in that specific room. I will not go into the specifics of how the impulse response is constructed, but you should now have enough understanding to grasp the concept. I hope to describe this process in more detail in a future blog post.
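A measured impulse response is long: one second at 44.1 kHz is 44,100 samples, so evaluating the convolution sum directly costs that many multiply-adds for every output sample. A common remedy, and what the name `fftconvolve` in the torchaudio snippet below refers to, is to multiply the signals' spectra instead. The numpy sketch below assumes a synthetic impulse response (exponentially decaying noise, not a real room) and uses illustrative names; it zero-pads both signals so the FFT's circular convolution matches the ordinary linear one.

```python
import numpy as np

def fft_convolve(x, h):
    """Linear convolution computed through the FFT; same result as np.convolve(x, h)."""
    n = len(x) + len(h) - 1            # length of the full convolution
    size = 1 << (n - 1).bit_length()   # next power of two >= n (zero-padding avoids wrap-around)
    spectrum = np.fft.rfft(x, size) * np.fft.rfft(h, size)
    return np.fft.irfft(spectrum, size)[:n]

# Synthetic stand-in for a measured response (an assumption, not a real room):
# white noise under an exponential decay, 0.5 s long at 16 kHz.
rng = np.random.default_rng(0)
sample_rate = 16000
t = np.arange(int(0.5 * sample_rate)) / sample_rate
ir = rng.standard_normal(len(t)) * np.exp(-t / 0.1)
ir /= np.linalg.norm(ir)               # unit energy, as in the torchaudio example

dry = rng.standard_normal(sample_rate)  # one second of noise as a placeholder dry signal
wet = fft_convolve(dry, ir)
```

The FFT route costs roughly O(N log N) instead of O(N x M), which is what makes long, dense impulse responses practical.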

Here is a short Python snippet that shows how to apply an impulse response to a speech signal using torchaudio.

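import torch
import torchaudio
import torchaudio.functional as F

# Load the impulse response, trim it to its main part and normalise it to unit energy
# (the 1.01 s to 1.3 s window depends on the recording; adjust or drop it for your own file)
rir_raw, sample_rate = torchaudio.load('path/to/impulse/response')
rir = rir_raw[:, int(sample_rate * 1.01) : int(sample_rate * 1.3)]
rir = rir / torch.norm(rir, p=2)

# Load the speech (it should have the same sample rate as the impulse response)
speech, _ = torchaudio.load('path/to/speech')

# Convolve speech with room impulse response (FFT-based, so long responses stay fast)
speech_with_reverb = F.fftconvolve(speech, rir)

torchaudio.save('path/to/speech-with-reverb', speech_with_reverb, sample_rate)

Conclusion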

In conclusion, we have covered the fundamental idea behind convolutional reverb, a technique that adds depth and dimension to audio recordings by simulating the natural echo and reverberation of a space. We have seen how convolution works and how it can be applied to create a realistic reverb effect for a particular acoustic environment. Understanding this basic algorithm enables us to use the technique more effectively and create high-quality audio recordings. As with any technique, there are pros and cons to using convolutional reverb.

One of the major advantages of convolutional reverb is its ability to produce realistic, natural-sounding reverberation. Convolving with a long impulse response is more demanding than many algorithmic reverbs, but FFT-based convolution makes it practical in real time, and it is easy to use: load an impulse response and apply it. This makes it a popular choice for many sound designers, musicians, and game developers, who use it to create immersive soundscapes that enhance the overall sound quality of audio recordings.

However, one of the major disadvantages of convolutional reverb is that it needs a high-quality impulse response that accurately captures the acoustic characteristics of a particular environment, and obtaining one can be time-consuming and require specialized equipment. Additionally, because the reverb is fixed by the recorded response, convolutional reverb offers limited room to manipulate and shape the effect.

Overall, understanding the fundamental idea behind convolutional reverb makes it easier to understand the exact algorithm and to use the technique effectively. Thanks to its ability to produce realistic, natural-sounding reverberation, convolutional reverb is likely to keep playing a significant role in audio technology.

References

https://wallpaper.dog/digital-art

https://www.youtube.com/watch?v=KuXjwB4LzSA

https://freesound.org/people/NoiseCollector/sounds/3718/

https://pytorch.org/audio/main/tutorials/audio_data_augmentation_tutorial.html#simulating-room-reverberation

https://en.wikipedia.org/wiki/Reverb_effect